Layoffs! Watson Health, the elder statesman of AI in healthcare, was recently reported to be laying off as much as 70% of its staff, which drew wide attention across the industry. Some financial analysts have even called the division a bottomless money pit.

So how do you build a more rational, clear-headed way of thinking amid the AI boom? Here, Prof. Xing Bo lays out where the boundaries of AI lie.

We manufacture a lie, and when it is finally punctured, it is the honest people doing the work who take the punishment. This is very unfair to R&D personnel.

-- Xing Bo

Xing Bo studied under the eminent academic Michael Jordan and is Director of the Machine Learning and Medical Center at Carnegie Mellon University. He pursued doctoral studies in both biochemistry and computer science, founded the general-purpose machine learning platform Petuum, and secured investment from SoftBank.

Taking on Facebook's 100 million users

A less than "glorious" experience

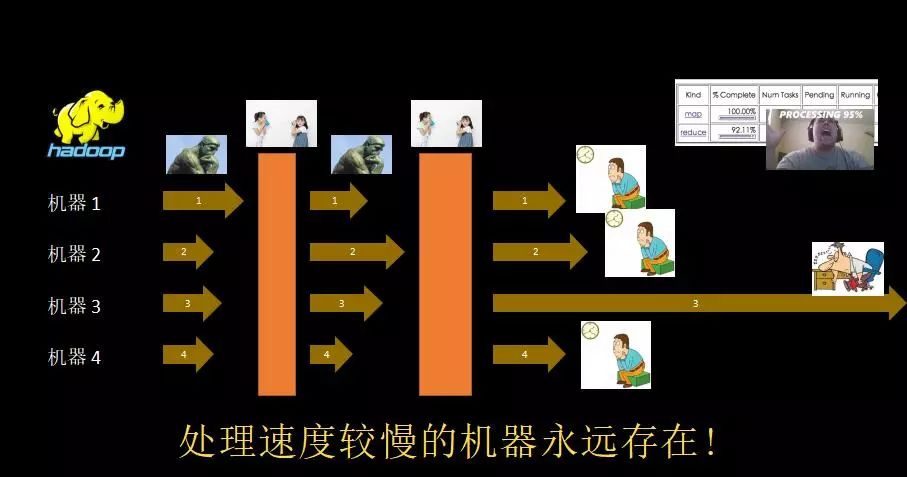

Today's artificial intelligence faces a very practical engineering problem, the scale bottleneck:

An algorithm that runs on a single machine in the lab may not work in a real environment.

Let me talk about my experience at Facebook.

Around 2011, we built a fairly successful social model:

Working from a database of 1 million people centered on Hollywood stars, it could classify people and make accurate recommendations.

But when I deployed this model on Facebook's 100 million users, the results were quite disappointing:

In principle, 1 million users can be processed in 6 minutes, so 100 million users would take 600 minutes on one machine. With 1,000 machines, that should come down to 0.6 minutes. But the result?

Forget 0.6 minutes. After a week it still had not finished. It got stuck partway through.
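
The back-of-the-envelope estimate above can be written out as a short calculation. This is only a sketch that assumes perfect linear scaling with no communication cost; the function name `ideal_minutes` and its parameters are hypothetical, introduced here for illustration.

```python
def ideal_minutes(users, machines, minutes_per_million=6.0):
    """Ideal runtime in minutes, assuming perfect linear scaling.

    Assumption: cost grows linearly with the number of users and divides
    evenly across machines, with zero communication overhead.
    """
    single_machine_minutes = minutes_per_million * users / 1_000_000
    return single_machine_minutes / machines


# Lab scale: 1 million users on one machine.
print(ideal_minutes(1_000_000, 1))
# Production scale: 100 million users on one machine.
print(ideal_minutes(100_000_000, 1))
# Production scale spread across 1,000 machines.
print(ideal_minutes(100_000_000, 1000))
```

Under that idealized assumption, 100 million users need 600 minutes on one machine and 0.6 minutes on 1,000 machines. The week-long run described above shows how far a real cluster can fall from this ideal.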

>>>>

Nothing was wrong with the algorithm. Nothing was wrong with the model. The machines were there. So why did it still get stuck?

Commanding 1,000 troops is not the same as directing one person. When you put an algorithm on 1,000 machines, you run into the problem of communication between machines.

For example, machine learning requires repeated iterations. After each iteration the machines need a handshake, in which each one reports whether its iteration is complete or not. Each handshake is a barrier where you have to wait. When several machines iterate, their speeds differ, and in every round you can only move forward once the slowest machine has finished.
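
The cost of this waiting can be illustrated with a toy simulation. It is a minimal sketch, not the system used in the talk: the function `simulate_barrier_sync`, the one-unit base cost per iteration, and the exponential jitter are all assumptions chosen for illustration.

```python
import random


def simulate_barrier_sync(num_workers, num_iterations, seed=0):
    """Toy model of barrier-synchronized iterations.

    Returns (synchronized_time, mean_worker_time):
    - synchronized_time: total time when every round waits for the slowest worker
    - mean_worker_time: average compute time actually spent by a single worker
    """
    rng = random.Random(seed)
    synchronized_time = 0.0
    per_worker_total = [0.0] * num_workers

    for _ in range(num_iterations):
        # Assumed cost model: 1.0 time unit plus random jitter per worker.
        step_times = [1.0 + rng.expovariate(5.0) for _ in range(num_workers)]
        # Barrier: the round ends only when the slowest worker finishes.
        synchronized_time += max(step_times)
        for worker, elapsed in enumerate(step_times):
            per_worker_total[worker] += elapsed

    mean_worker_time = sum(per_worker_total) / num_workers
    return synchronized_time, mean_worker_time


for workers in (1, 10, 1000):
    sync_time, mean_time = simulate_barrier_sync(workers, num_iterations=50)
    print(workers, round(sync_time, 2), round(mean_time, 2))
```

Because each round advances only when the slowest worker finishes, the synchronized time in this model tends to grow with the number of workers even though the average worker is no slower.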

So, this involves a very critical issue:

Machine learning, as an algorithmic and theoretical tool and as a model, is closely tied to the computing hardware it runs on. New AI programs require new AI engines, just as new aircraft designs need new engines to power them.

A mature artificial intelligence scholar should be more than an algorithm or modeling expert. You cannot just throw the work over to programmers to implement. You need a deeper understanding of the task and the working environment.

For example, an AI project is strongly driven by its purpose, and the mode of operation is actually a secondary factor.

If you want to perform an air show in tight formation, you do not have to fly very fast, so you can give up some efficiency. But if the aircraft is fighting a fire, its flight attitude does not have to be especially precise, and that precision can be traded for efficiency.

Mining AI for gold

AI architects must think about 9 key issues

>>>>

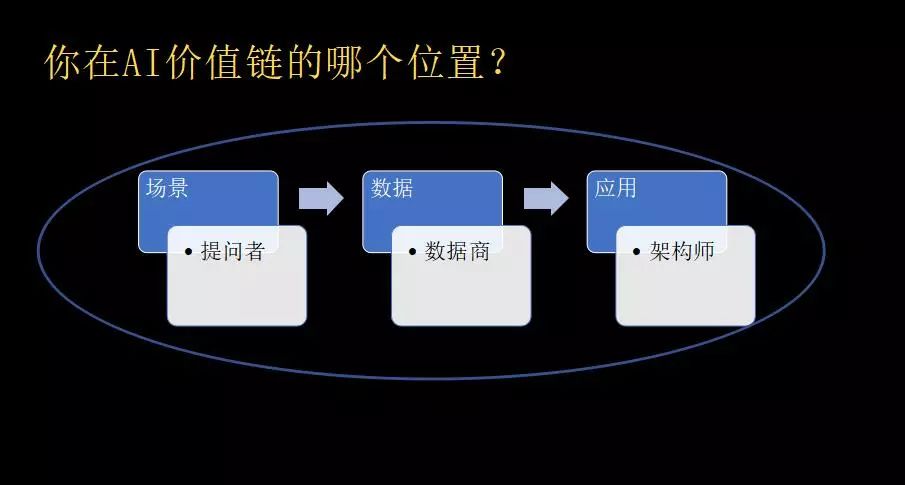

How can you seize the future opportunities in AI?

Let's start with a story. In the early United States, many people rushed to California to dig for gold, but most of them did not actually get rich, whatever they put into it. Of those who truly did get rich, I remember two:

Levi Strauss, who made jeans. At the time, he made gear and clothing for the workers;

Sam Brannan, who supplied tools, because every gold digger needs a shovel.

Similarly, AI has many application scenarios: you can generate products or services from data, or you can provide AI developers and users with the tools they need.

So think it over carefully: where is your opportunity along the AI value chain?

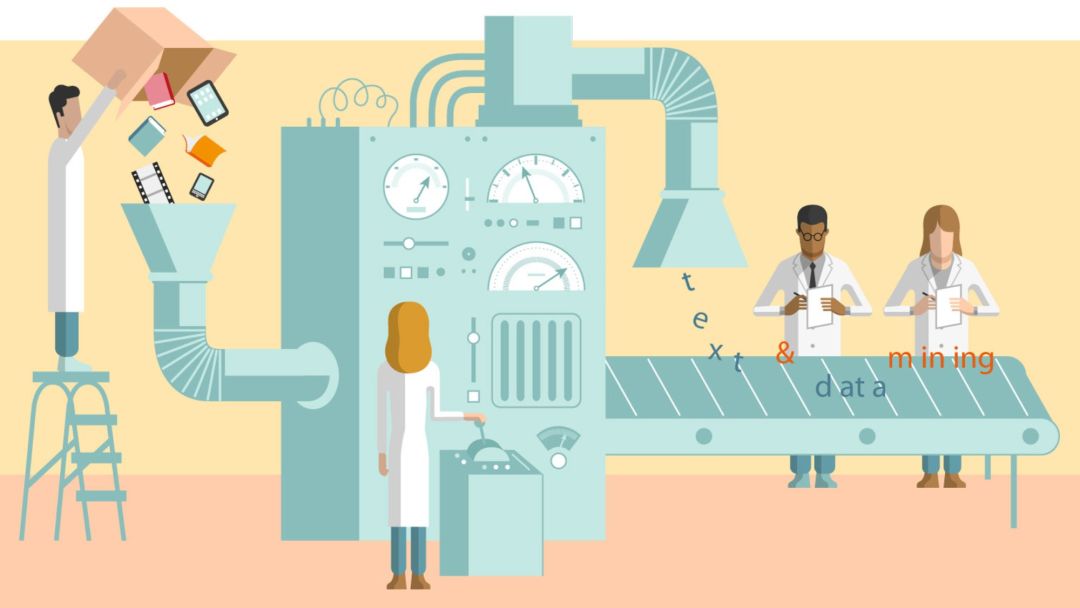

Today, the biggest bottleneck facing tech companies' AI solutions is that they cannot be created and replicated at a controlled cost, the way commodities can.

Therefore, to achieve reliable, useful, and usable AI, production has to move beyond the workshop and adopt a standardized industrial model. It is like making a car: first make the parts, then build a supply chain, and finally do the assembly.

We hope that through this effort, artificial intelligence will move away from closely guarded secrets and black-box techniques and toward engineering:

making it less dependent on language, device, and interface, so that different people can take what they need, the way we use electricity or Microsoft Word, rather than having to hire a Chief AI Officer or a large number of PhDs to do hands-on research and development.

>>>>

In short, a mature AI architect must learn to think about the following 9 key issues:

1 Build a complete, engineering-grade, credible solution, not a toy or a demo;

Moreover, the system should be pluggable, a plug-in platform. Think of a car production line: product upgrades and part replacements can be done without tearing down the entire line.

2 Replicability;

Others can use it, and others can build it as well.

3 Economic feasibility;

4 Adaptable to the particular conditions of different users;

For example, when Boeing produces aircraft, does it need to build a different aircraft for each customer? No. It can provide the basic engines, airframes, operating software, and so on.

In other words, the user's individual needs and the manufacturer's solution must meet in the middle rather than at either end. This design idea is still relatively lacking in artificial intelligence.

5 Results are reproducible;

6 Understand how the solution is constructed;

7 Explainability, especially when unexpected results occur: know where something went wrong;

8 Results can be exchanged, so that others can also reproduce the results you claim;

9 Be clear about what can be done and what cannot.

For example, I can tell you that I am not prepared to ride in a self-driving car within the next 5 years, or at least I strongly insist that a self-driving car have a steering wheel that I can hold myself. Why?

Because as a developer, I am well aware that in many current algorithms, including deep learning algorithms, the causality is not clear.

Will artificial intelligence surpass humanity?

Be wary of harm to the discipline

Many people think that artificial intelligence will grow in an exponential explosion, just like Moore's Law. But the reality is that Moore's Law rests on a solid base of data, and artificial intelligence does not.

The development of artificial intelligence is bounded and eventually converges. Moreover, the point where it converges is not human intelligence, and it may even go backwards.

For example, Elon Musk, who has always been keen on aggressively deploying robot factories, recently acknowledged publicly that after automating the factory, efficiency did not improve but actually declined.

>>>>

So what can't AI do? There are 3 aspects:

1 Machines cannot ask questions;

People are very good at challenging the unknown and asking questions. But for a machine to pose a genuinely unknown problem (not a quiz question drawn from textbook knowledge), or to discover a new physical or mathematical theorem, is very, very difficult. I do not believe any algorithm or model can do this in the foreseeable future.

2 Machines also cannot know what they do not know;

There is a saying that one measure of a person's cultivation and learning is whether you know what you do not know. The machine does not.

Because the machine has no sense of boundaries, it does not know that beyond its knowledge there are things it does not know. This is a capability that cannot be programmed in.

3 Machines find it hard to learn from small data and still require manual architecture design and tuning.

People start learning from small data. They draw on environment, prior knowledge, and logical thinking together to acquire knowledge from different data, and then they integrate it and grow.

This is where artificial intelligence is rather weak, and even where it can be done, its performance at the engineering level is quite unstable.

It needs very, very aggressive tuning. It is treated as a mysterious secret weapon, full of tricks of the trade, and this has hindered the commercialization and scaling of artificial intelligence.

>>>>

Another question: Will artificial intelligence surpass humanity?

In fact, artificial intelligence and human intelligence follow two different paths.

Successful artificial intelligence embodies a mechanical or engineering aesthetic, whereas human intelligence has a natural aesthetic, a biological and philosophical beauty.

So, my point is:

1 On specific tasks with limited rules, it is only a matter of time before machines exceed the human level.

For example, in solving math problems, playing chess, or playing poker, once the rules are clear the outcome is determined, so don't be surprised.

2 In unstructured scenarios, machines still cannot outperform humans, even on seemingly simple problems such as emotion recognition.

For example, is the girl happy or sad? Such a program is in fact impossible to write.

In short, for the foreseeable future, human-machine collaboration is the right direction. Humans should not be afraid of artificial intelligence or machine learning. So what should we really worry about?

What deserves attention is that people should not become like machines along the way, turning cold or losing their humanity altogether.

Summary

Finally, I would like to say a few words on behalf of AI practitioners: those who stay silent, who do not speak out on the Internet, and who are unlikely to have any related business interests:

Driven by capital, by the weaknesses of human nature, or by other factors, we have seen artificial intelligence being hyped. But because the hype does not match reality, the result is a gap between expectation and reality. Who is the victim in the end? What gets hurt?

The discipline itself is damaged. I find that very sad.

In the first two AI winters, there were actually many geniuses and very honest, pragmatic researchers. Because of this kind of misunderstanding, they did not get funding, support, or understanding, and they eventually had to leave the field.

This is happening now.

We manufactured a lie, and when the lie was finally punctured, it was the honest people doing the work who took the punishment. This is very unfair to R&D personnel.

Therefore, I hope everyone will treat artificial intelligence more rationally and purely.
