Which sensors are commonly used for obstacle avoidance?

Whether the task is navigation planning or obstacle avoidance, sensing the surrounding environment is the first step. For obstacle avoidance, a mobile robot needs to obtain real-time obstacle information through its sensors, including size, shape, and position. Many kinds of sensors are used for obstacle avoidance, each with different principles and characteristics. The most common today are vision sensors, laser sensors, infrared sensors, and ultrasonic sensors. Below I briefly introduce the basic working principles of these sensors.

Ultrasonic

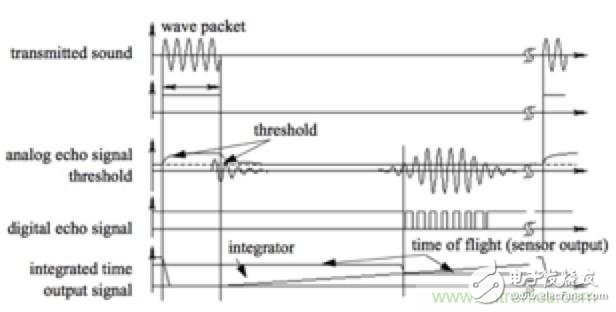

The basic principle of an ultrasonic sensor is to measure the time of flight of an ultrasonic wave. The distance is given by d = vt/2, where d is the distance, v is the speed of sound, and t is the round-trip time of flight. Since the speed of sound in air depends on temperature and humidity, these factors need to be taken into account for more accurate measurements.
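As a rough illustration of the formula above, here is a minimal Python sketch. It assumes the common linear approximation for the speed of sound in dry air (about 331.3 + 0.606·T m/s, with T in degrees Celsius) and ignores humidity; the function names and the 10 ms echo time are made up for the example.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def ultrasonic_distance(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Distance d = v * t / 2, where t is the round-trip time of flight."""
    return speed_of_sound(temp_c) * round_trip_s / 2.0


if __name__ == "__main__":
    echo_time = 0.010  # hypothetical 10 ms round-trip time
    for temp in (0.0, 20.0, 40.0):
        print(f"{temp:4.0f} C -> {ultrasonic_distance(echo_time, temp):.3f} m")
```

Running it for the same echo time at different temperatures shows why temperature compensation matters when centimeter-level accuracy is needed.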

The picture above illustrates the signals of an ultrasonic sensor. A piezoelectric or electrostatic transmitter generates an ultrasonic pulse with a frequency of several tens of kHz, forming a wave packet. The system detects the returning echo once it exceeds a certain threshold, then uses the measured time of flight to calculate the distance. Ultrasonic sensors generally have a short working range: the typical effective detection distance is a few meters, and there is a minimum blind zone of several tens of millimeters. Because of their low cost, simple implementation, and mature technology, ultrasonic sensors are widely used in mobile robots. They also have some drawbacks. First, look at the picture below.

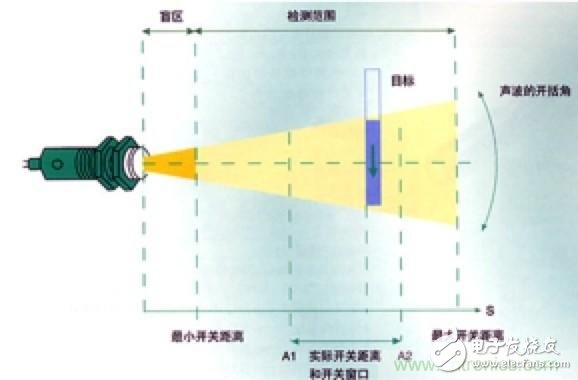

Because the sound wave spreads out in a cone, the distance we actually measure is not the distance to a single point, but the distance to the closest object within the cone angle.

In addition, the measurement period of an ultrasonic sensor is long. For an object about 3 meters away, for example, the sound wave takes about 20 ms to travel there and back. Furthermore, different materials reflect or absorb sound waves differently, and multiple ultrasonic sensors may interfere with each other. All of these need to be considered in practical applications.

Infrared

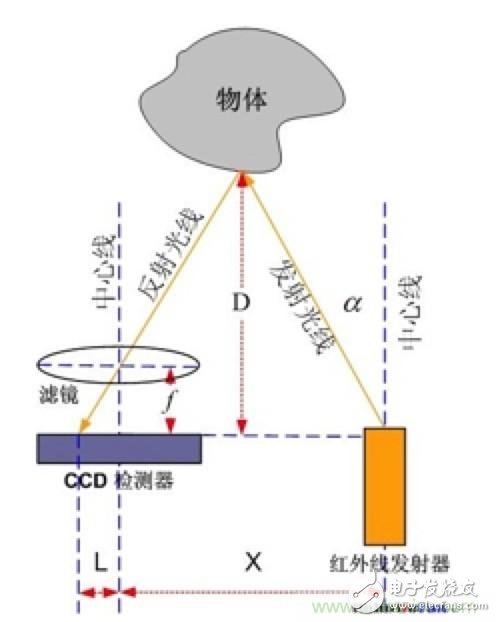

Infrared ranging is generally based on the principle of triangulation. The infrared emitter emits an infrared beam at a certain angle. When the beam hits an object, the light is reflected. Once the reflected light is detected, the object distance D can be calculated from the geometric triangle relationship of the structure.

When D is small enough, the L value in the figure above becomes quite large. If it exceeds the detection range of the CCD, the sensor cannot see the object even though it is very close. When D is large, L becomes very small and the measurement accuracy deteriorates. Therefore, common infrared sensors have a relatively short measuring range, shorter than that of ultrasonic sensors, and infrared sensors designed for longer ranges also have a minimum distance limit. In addition, infrared sensors cannot measure the distance to transparent or nearly black objects. However, infrared sensors have a higher bandwidth than ultrasonic sensors.

Laser

Common lidars are based on time of flight (ToF). The distance is obtained by measuring the flight time of the laser, d = ct/2, similar to the ultrasonic ranging formula mentioned above, where d is the distance, c is the speed of light, and t is the time interval from transmission to reception. A lidar includes a transmitter and a receiver: the transmitter illuminates the target with a laser, and the receiver receives the reflected light. A mechanical lidar also contains a rotating mechanism with a mirror, so that the beam sweeps across a plane and we can measure distance information over that plane.

There are different ways to measure the time of flight. One is to use a pulsed laser and measure the elapsed time directly, similar to the ultrasonic scheme described above. Because the speed of light is far higher than the speed of sound, this requires very high-precision timing components and is therefore very expensive. Another is to emit a frequency-modulated continuous wave and measure the time through the beat frequency between the emitted wave and the received reflection.
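To get a feeling for why direct pulse timing is demanding, the following Python sketch applies d = ct/2 in both directions. The function names are illustrative only, and the calculation ignores everything except the propagation time.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance(round_trip_s: float) -> float:
    """Distance d = c * t / 2 for a measured round-trip time t."""
    return C * round_trip_s / 2.0


def timing_resolution(range_resolution_m: float) -> float:
    """Timer resolution needed to resolve a given range difference."""
    return 2.0 * range_resolution_m / C


if __name__ == "__main__":
    print(f"1 us round trip  -> {tof_distance(1e-6):.2f} m")
    print(f"1 cm resolution  -> {timing_resolution(0.01) * 1e12:.1f} ps")
```

Resolving 1 cm requires timing on the order of tens of picoseconds, whereas the same resolution with sound needs only tens of microseconds.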

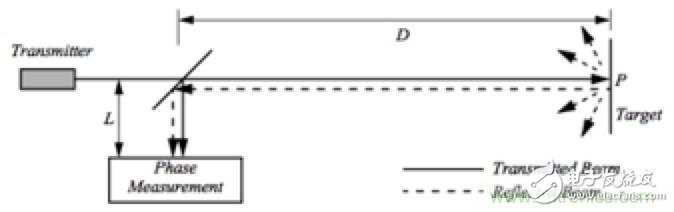

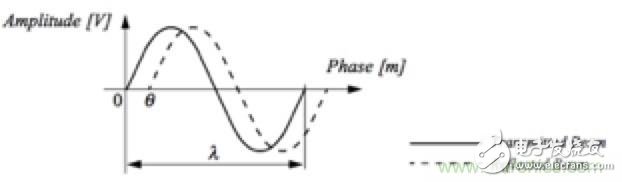

Figure 1

Figure 2

A simpler solution is to measure the phase shift of the reflected light. The sensor emits light that is amplitude-modulated at a known frequency and measures the phase shift between the transmitted and reflected signals, as shown in Figure 1. The wavelength of the modulated signal is λ = c/f, where c is the speed of light and f is the modulation frequency. After measuring the phase shift θ between the transmitted and reflected beams, the distance can be calculated as d = λθ/(4π), as shown in Figure 2 above.
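The phase-shift formula can be sketched in a few lines of Python. The 10 MHz modulation frequency and the function names are assumptions for the example. The second function adds one consequence of the formula that is worth keeping in mind: because the phase wraps around at 2π, distances beyond λ/2 cannot be distinguished from shorter ones.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def phase_shift_distance(theta_rad: float, mod_freq_hz: float) -> float:
    """Distance d = lambda * theta / (4 * pi), with lambda = c / f."""
    wavelength = C / mod_freq_hz
    return wavelength * theta_rad / (4.0 * math.pi)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance before the phase wraps past 2*pi (equals lambda / 2)."""
    return C / mod_freq_hz / 2.0


if __name__ == "__main__":
    f = 10e6  # hypothetical 10 MHz modulation frequency
    print(f"theta = pi/2 -> {phase_shift_distance(math.pi / 2, f):.3f} m")
    print(f"unambiguous range -> {unambiguous_range(f):.3f} m")
```

A lower modulation frequency extends the unambiguous range, at the price of a coarser distance resolution for the same phase accuracy.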

A lidar's measuring range can reach tens or even hundreds of meters, its angular resolution is high, usually a few tenths of a degree, and its ranging accuracy is also high. However, the uncertainty of the measured distance is inversely proportional to the square of the amplitude of the received signal. Therefore, measurements of dark or distant objects are not as good as those of bright, nearby objects. Lidar also cannot measure transparent materials such as glass. Finally, because of the complex structure and expensive components, lidars themselves are costly.

Some low-end lidars use triangulation for ranging. Their range is limited, usually to a few meters, and their accuracy is relatively low. Even so, they work well for SLAM in low-speed indoor environments, or when used only for obstacle avoidance outdoors.

Vision

There are many common computer vision solutions, such as binocular (stereo) vision, ToF-based depth cameras, and structured-light depth cameras. A depth camera provides both an RGB image and a depth map. Whether based on ToF or structured light, it performs poorly in bright outdoor light because it relies on active illumination. A structured-light depth camera, for example, projects a speckle pattern that is random but fixed. When the speckles land on an object, they appear at different positions in the camera image depending on the object's distance from the camera. From the offset between each speckle in the captured image and its position in the calibrated reference pattern, together with parameters such as the camera position and sensor size, the distance between the object and the camera can be calculated. Our current E-robot works mainly outdoors, where active light sources are strongly affected by conditions such as sunlight. A passive scheme is therefore more suitable, so the vision solution we adopt is based on binocular vision.

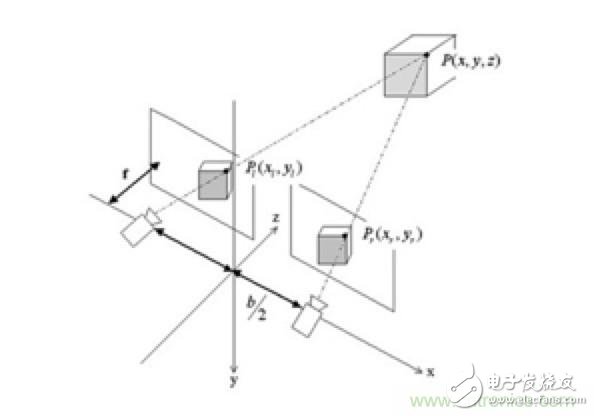

Binocular ranging is also a form of triangulation. Because the two cameras are in different positions, like human eyes, they see the scene differently. The same point P seen by both cameras falls on different pixel positions in the two images, and the distance to that point can be measured by triangulation. Unlike structured light, where the points used in the calculation are actively projected and known, the points used by a binocular algorithm are generally image features extracted by the algorithm, such as SIFT or SURF features, so the result computed from these features is a sparse map.
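For an idealized pair of rectified pinhole cameras, the triangulation reduces to Z = f·B/d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity, the horizontal pixel offset of point P between the two images. The sketch below assumes exactly this idealized setup; the numbers are hypothetical and not taken from our robot.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for rectified pinhole cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    focal_px, baseline_m = 700.0, 0.12  # hypothetical camera parameters
    for disparity in (84.0, 42.0, 8.4, 4.2):
        depth = stereo_depth(focal_px, baseline_m, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:5.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for close ones.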

For good obstacle avoidance, a sparse map is not enough. What we need is a dense point cloud, that is, depth information for the entire scene. Dense matching algorithms can be roughly divided into two categories: local algorithms and global algorithms. Local algorithms use only the information in a pixel's neighborhood to calculate its depth, while global algorithms use all the information in the image. In general, local algorithms are faster, but global algorithms are more accurate.
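To make the idea of a local algorithm concrete, here is a deliberately naive block-matching sketch in plain Python. It is not the algorithm we run on the robot; it only shows the principle: for each pixel in the left image, compare a small window with windows shifted along the same row of the right image and keep the shift with the lowest sum of absolute differences (SAD). Images are assumed to be rectified and are given as lists of rows of gray values; all names and parameters are illustrative.

```python
import random


def sad_disparity(left, right, max_disp, half_win):
    """Per-pixel disparity by minimum SAD over a (2*half_win+1)^2 window."""
    h, w = len(left), len(left[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(half_win, h - half_win):
        # Start far enough from the left border that every shifted window fits.
        for x in range(half_win + max_disp, w - half_win):
            best_d, best_cost = 0, float("inf")
            for d in range(max_disp + 1):
                cost = 0.0
                for dy in range(-half_win, half_win + 1):
                    for dx in range(-half_win, half_win + 1):
                        cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
                if cost < best_cost:
                    best_d, best_cost = d, cost
            disp[y][x] = best_d
    return disp


if __name__ == "__main__":
    random.seed(0)
    right_img = [[random.random() for _ in range(30)] for _ in range(8)]
    # Synthetic left image: the right image shifted 3 pixels to the right.
    left_img = [row[-3:] + row[:-3] for row in right_img]
    result = sad_disparity(left_img, right_img, max_disp=6, half_win=1)
    print(result[4][7:29])
```

Production implementations add cost aggregation, sub-pixel refinement, and left-right consistency checks, and global methods replace the per-pixel minimum with an optimization over the whole image.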

There are many ways to implement both kinds of algorithm. From their output we can estimate the depth of the entire scene, which helps us find the traversable areas and the obstacles in the scene. The output resembles the 3D point cloud produced by a lidar, but carries richer information. Compared with lidar, the advantage is a much lower price. The disadvantages are also obvious: the measurement accuracy is worse and the computing requirements are much higher. Of course, the accuracy gap is relative; the accuracy is entirely sufficient in practice, and our current algorithm runs in real time on our NVIDIA TK1 and TX1 platforms.

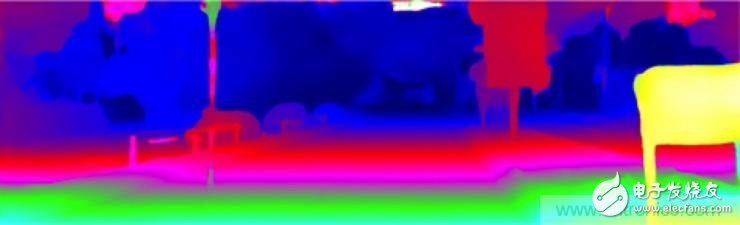

Image from the KITTI dataset

The actual output depth map; different colors represent different distances

In practice, we read a continuous stream of video frames from the camera. From these frames we can also estimate the motion of target objects in the scene, build motion models for them, and estimate and predict their direction and speed of movement, which is very useful for our actual walking and obstacle avoidance planning.

The above are the most common types of sensors, each with its own advantages and disadvantages. In practice, several different sensors are generally combined so that, across different applications and environmental conditions, the robot can perceive obstacle information correctly as reliably as possible. The obstacle avoidance scheme of our company's E-robot is based mainly on binocular vision, assisted by a variety of other sensors, so that obstacles anywhere in the 360-degree space around the robot can be detected effectively and the robot can walk safely.

Principles of common obstacle avoidance algorithms

Before discussing obstacle avoidance algorithms, we assume that the robot already has a navigation planning algorithm that plans its motion, and that it walks along the planned path. The task of the obstacle avoidance algorithm is to update the target trajectory in real time and go around an obstacle when, during normal walking, the sensor input reveals that an obstacle is present.

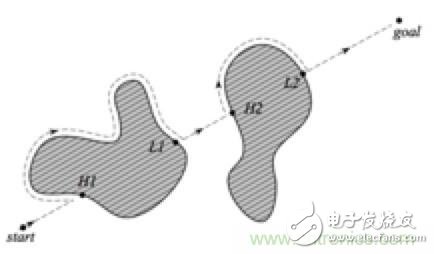

Bug algorithm

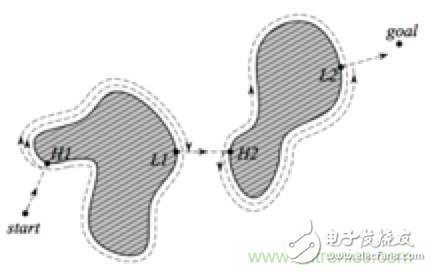

The Bug algorithm is probably the simplest obstacle avoidance algorithm. Its basic idea is to follow the contour of a detected obstacle and so go around it. There are many variants of the Bug algorithm. In the Bug1 algorithm, for example, the robot first circles the obstacle completely and then leaves from the point on the contour that is closest to the target. Bug1 is inefficient, but it guarantees that the robot reaches its goal.
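The departure rule of Bug1 is easy to state in code. The sketch below assumes the robot has already recorded the positions it passed while circling the obstacle (the hypothetical list `boundary_points`) and simply picks the one closest to the goal; contour following itself is not shown.

```python
import math


def bug1_leave_point(boundary_points, goal):
    """After a full circuit, return the recorded boundary point closest to the goal."""
    return min(boundary_points, key=lambda p: math.dist(p, goal))


if __name__ == "__main__":
    # Hypothetical positions recorded while circling a square obstacle.
    boundary = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
    print(bug1_leave_point(boundary, goal=(5.0, 3.0)))
```

The full circuit is what makes Bug1 slow, but it is also what allows the robot to know that no better departure point exists on that obstacle.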

Bug1 algorithm example

In the improved Bug2 algorithm, the robot also starts by following the contour of the obstacle, but it does not circle the obstacle completely. As soon as the robot can move directly toward the target, it leaves the obstacle, which gives a relatively short total walking path.

Bug2 algorithm example

There are many other variants of the Bug algorithm as well, such as the Tangent Bug algorithm. In many simple scenarios the Bug algorithms are easy and convenient to implement, but they do not take the robot's dynamics and similar factors into account, so they are less reliable and less useful in more complex real-world environments.

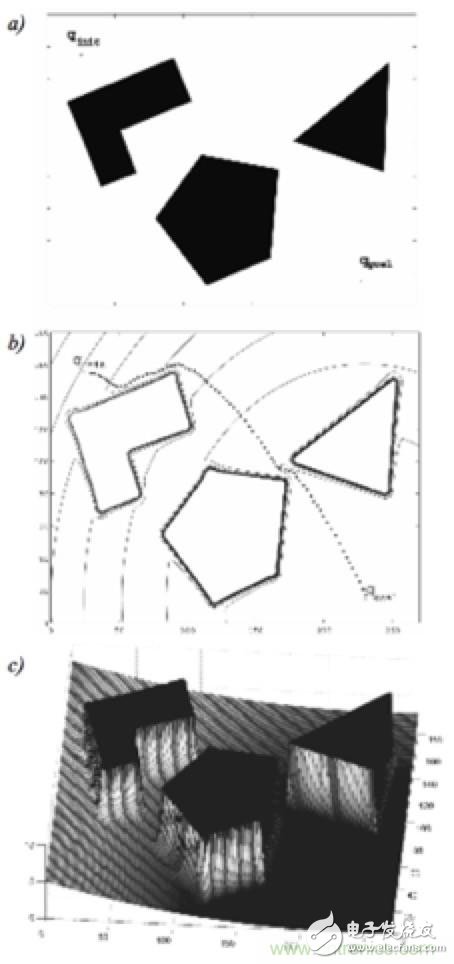

Potential field method (PFM)

In fact, the potential field method can be used not only for obstacle avoidance but also for path planning. It treats the robot as a point in a potential field that moves along with the field. The target appears as a valley, which attracts the robot, and each obstacle appears as a peak in the potential field, which repels it. All these forces are summed and applied to the robot, guiding it smoothly toward the target while avoiding collisions with known obstacles. When a new obstacle is detected while the robot is moving, the potential field must be updated and the path re-planned.

The figure above shows a typical potential field. In the top panel (a), the upper left corner is the starting point, the lower right corner is the target point, and the three blocks in the middle are obstacles. The middle panel (b) is an equipotential map: each continuous line is an equipotential contour, and the dotted line is the path planned through the potential field. The robot walks in the direction the potential field points, and you can see that it goes around the relatively high obstacles. The bottom panel shows the potential field formed by the attraction of the target combined with the repulsion of all the obstacles. Starting from the upper left corner, the robot descends along the potential field all the way to the target point. Each obstacle appears as a very high plateau in the field, so the planned path does not pass over the obstacles.
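The idea can be sketched with one common textbook choice of potentials: a quadratic attractive potential toward the goal and a repulsive potential that acts only within an influence distance `rho0` of each obstacle. This is an assumed formulation for illustration, with point obstacles and hypothetical gains, not necessarily the one behind the figure.

```python
import math


def total_force(pos, goal, obstacles, k_att=1.0, k_rep=1.0, rho0=1.5):
    """Sum of the attractive force toward the goal and repulsive forces from obstacles."""
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)
        if 0.0 < rho < rho0:  # obstacles beyond rho0 have no influence
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho**2
            fx += mag * dx / rho
            fy += mag * dy / rho
    return fx, fy


def follow_field(start, goal, obstacles, step=0.05, tol=0.1, max_iters=2000):
    """Take fixed-length steps along the force direction until near the goal."""
    pos, path = start, [start]
    for _ in range(max_iters):
        if math.dist(pos, goal) < tol:
            break
        fx, fy = total_force(pos, goal, obstacles)
        norm = math.hypot(fx, fy)
        if norm == 0.0:
            break  # forces cancel out: the robot is stuck in a local minimum
        pos = (pos[0] + step * fx / norm, pos[1] + step * fy / norm)
        path.append(pos)
    return path


if __name__ == "__main__":
    path = follow_field((0.0, 0.0), (4.0, 0.0), obstacles=[(2.0, 0.5)])
    print(len(path), path[-1])
```

When a new obstacle is detected, it is simply appended to `obstacles` and the next step uses the updated field.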

An extended method adds two further potential fields to the basic one: the rotation potential field and the task potential field. They additionally consider the interaction between the obstacles and the robot's own state, such as its orientation and speed.

The rotation potential field takes into account the relative orientation of the obstacle and the robot. When the robot is heading toward an obstacle, the repulsion is increased; when it is moving parallel to the object, the repulsion is reduced, because it is clearly not going to hit the obstacle. The task potential field filters out obstacles that, given the robot's current velocity, will not affect its motion in the near term, which allows for a smoother trajectory.

There are other improved methods as well, such as the harmonic potential field method. In theory the potential field method has many limitations, such as the local minimum problem and the oscillation problem, but it works well in practice and is relatively easy to implement.

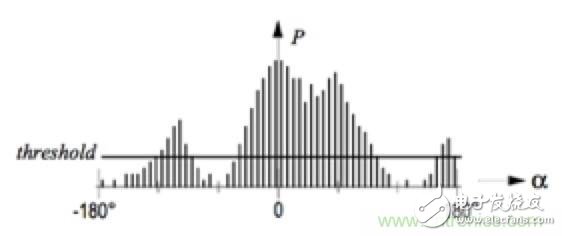

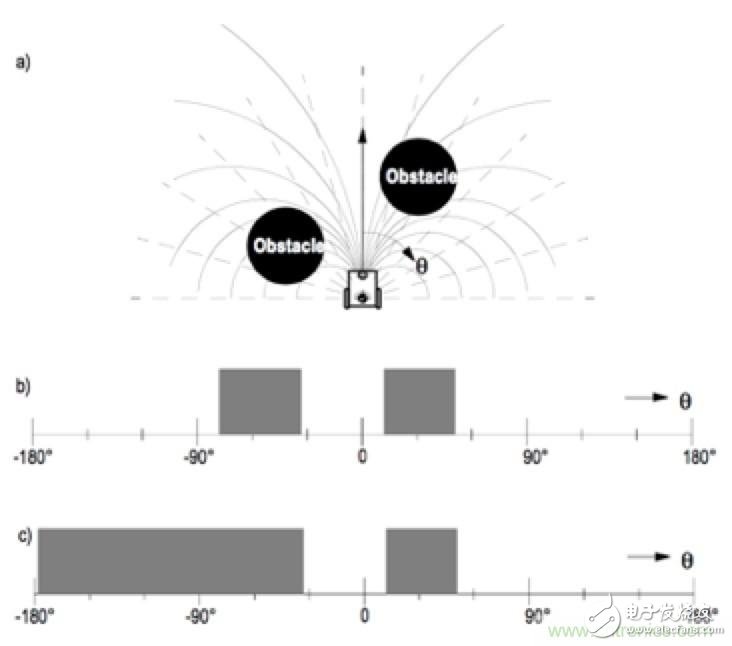

Vector field histogram (VFH)

During execution, the VFH algorithm creates a local map of the mobile robot's current surroundings in a polar-coordinate representation. This local grid map is updated with recent sensor data. The polar histogram generated by the VFH algorithm is shown in the figure:

In the figure, the x-axis is the angle at which the robot perceives obstacles, and the y-axis is the probability p that an obstacle exists in that direction. In practice, all the gaps in this histogram that are wide enough for the robot to pass through are identified first, then a cost function is evaluated for each gap, and finally the passage with the lowest cost is selected.

The cost function is affected by three factors: the target direction, the robot's current heading, and the previously selected direction. The final cost is a weighted sum of these three factors, and the robot's preference can be tuned by adjusting the weights. The VFH algorithm also has extensions and improvements. The VFH+ algorithm, for example, takes the robot's kinematic limits into account. Because of differences in the underlying drive structure, a robot's actual motion capability is limited; a car-like structure, for example, cannot turn in place at will. VFH+ considers how obstacles block the trajectories the robot can actually execute, and masks out directions that are not occupied by obstacles but cannot be reached because an obstacle blocks the way. Our E-Road robot uses a two-wheel differential drive, which makes its movement very flexible, so in practice it is less affected by these factors.
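The gap selection and the weighted cost can be sketched as follows. This is a simplified illustration rather than the published VFH algorithm: the histogram is a plain list of obstacle densities per angular sector, each sufficiently wide run of free sectors contributes its center as one candidate direction, runs that wrap around 360 degrees are not merged, and the threshold and weights are hypothetical.

```python
def angle_diff(a_deg, b_deg):
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def find_openings(hist, threshold, min_width):
    """Return (first, last) sector indices of free runs at least min_width wide."""
    openings, start = [], None
    for i, value in enumerate(list(hist) + [float("inf")]):  # sentinel closes the last run
        if value < threshold:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_width:
                openings.append((start, i - 1))
            start = None
    return openings


def choose_direction(hist, goal_deg, heading_deg, prev_deg,
                     threshold=0.5, min_width=3, weights=(5.0, 2.0, 2.0)):
    """Pick the opening whose center minimizes the weighted three-term cost."""
    sector = 360.0 / len(hist)
    w_goal, w_heading, w_prev = weights
    best = None
    for first, last in find_openings(hist, threshold, min_width):
        center = (first + last + 1) / 2.0 * sector
        cost = (w_goal * angle_diff(center, goal_deg)
                + w_heading * angle_diff(center, heading_deg)
                + w_prev * angle_diff(center, prev_deg))
        if best is None or cost < best[0]:
            best = (cost, center)
    return None if best is None else best[1]


if __name__ == "__main__":
    histogram = [0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1, 1]  # 12 sectors of 30 degrees
    print(choose_direction(histogram, goal_deg=60, heading_deg=30, prev_deg=30))
```

Raising the goal weight makes the robot more goal-directed, while raising the other two weights favors smoother, less oscillating motion.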

For details, you can look at this figure:

There are many more traditional obstacle avoidance methods like these. In addition, there are many intelligent obstacle avoidance techniques, such as neural networks and fuzzy logic.

The neural network method trains a model on complete paths the robot has walked from the initial position to the target position. When applied, the network takes the robot's previous position and velocity and the sensor readings as input, and outputs the desired next target or direction of motion.

The core of the fuzzy logic method is the fuzzy controller. The knowledge of experts or the experience of operators is written down as a set of fuzzy logic statements that control the robot's obstacle avoidance. For example: first, if an obstacle is detected far away to the front right, turn slightly to the left; second, if an obstacle is detected close by to the front right, slow down and turn left by a larger angle; and so on.
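The two example rules can be turned into a tiny fuzzy controller. The sketch below is only an illustration: the membership function, the distances at which an obstacle counts as "near" or "far", and the turn angles and speeds attached to each rule are all made-up values, and the output is a simple weighted average of the rule outputs.

```python
def near(dist_m, full=0.5, zero=2.0):
    """Membership of 'near': 1 below `full` meters, falling linearly to 0 at `zero`."""
    if dist_m <= full:
        return 1.0
    if dist_m >= zero:
        return 0.0
    return (zero - dist_m) / (zero - full)


def far(dist_m):
    """Membership of 'far', defined here as the complement of 'near'."""
    return 1.0 - near(dist_m)


def fuzzy_avoid(dist_front_right_m):
    """Return (left turn in degrees, speed factor) for an obstacle to the front right."""
    mu_near, mu_far = near(dist_front_right_m), far(dist_front_right_m)
    # Rule 1: obstacle far  -> turn slightly left (10 deg), keep full speed (1.0).
    # Rule 2: obstacle near -> turn left more (40 deg), slow down (0.3).
    total = mu_near + mu_far
    turn = (mu_far * 10.0 + mu_near * 40.0) / total
    speed = (mu_far * 1.0 + mu_near * 0.3) / total
    return turn, speed


if __name__ == "__main__":
    for d in (3.0, 1.25, 0.3):
        print(d, fuzzy_avoid(d))
```

Because the memberships overlap, the commanded turn and speed change gradually as the obstacle gets closer instead of switching abruptly between the two rules.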

What problems exist in the obstacle avoidance process?

Sensor failure

In principle, no sensor is perfect. For example, if the robot is facing a completely transparent pane of glass, infrared, laser, or vision schemes may fail to detect it because the light passes straight through, and a sensor such as an ultrasonic sensor is needed to detect the obstacle. In real applications we therefore must combine several kinds of sensors, cross-validate the data they collect, and fuse the information so that the robot works stably and reliably.

There are other failure modes as well. Ultrasonic ranging, for example, generally requires an array of ultrasonic sensors. If the sensors in the array fire at the same time, they easily interfere with each other: the sound wave emitted by sensor A is reflected and received by sensor B, producing a wrong measurement. If they instead fire one by one in sequence, the relatively long sampling period of each ultrasonic sensor slows down the whole acquisition and affects real-time obstacle avoidance. Everything from the hardware structure to the algorithm must therefore be designed carefully to maximize the sampling rate while reducing crosstalk between sensors.
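A quick back-of-the-envelope calculation shows the size of the problem. The sketch below assumes a speed of sound of about 343 m/s and that each firing slot must last at least as long as the round trip to the maximum range; the array size and the idea of firing sensors in groups that do not disturb each other are hypothetical examples.

```python
SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at about 20 C


def ping_time(max_range_m: float) -> float:
    """Minimum time per firing slot: the round trip to the maximum range."""
    return 2.0 * max_range_m / SPEED_OF_SOUND


def array_scan_rate(num_slots: int, max_range_m: float) -> float:
    """Update rate (Hz) of the whole array when it needs num_slots sequential slots."""
    return 1.0 / (num_slots * ping_time(max_range_m))


if __name__ == "__main__":
    print(f"one ping at 3 m: {ping_time(3.0) * 1000:.1f} ms")
    print(f"8 sensors, one at a time: {array_scan_rate(8, 3.0):.1f} Hz")
    print(f"8 sensors in 2 groups:    {array_scan_rate(2, 3.0):.1f} Hz")
```

Firing several sensors that point in sufficiently different directions in the same slot is one way to raise the update rate, provided crosstalk between them has been checked on the real hardware.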

As another example, a robot that moves usually needs motors and drivers, which can cause electromagnetic compatibility problems while operating. These may lead to errors in sensor readings, especially for analog sensors. During implementation, the motor drivers, the sensor acquisition circuitry, and the power and communication parts should therefore be kept isolated from one another so that the whole system works properly.

Algorithm design

Many of the algorithms just mentioned do not fully consider the kinematic and dynamic models of the mobile robot in their design. The trajectory planned by such an algorithm may not be kinematically feasible, or it may be kinematically feasible but very difficult to control. For example, a robot with a car-like chassis cannot turn in place, and even a robot that can turn in place may have motors that cannot execute a large maneuver all at once. In the design we must therefore optimize the structure and control of the robot itself, and also consider feasibility when designing the obstacle avoidance scheme.

In the design of the overall algorithm architecture, we must also consider that obstacle avoidance exists to prevent collisions and damage to the robot itself, so while the robot is working it must be a high-priority task, possibly the highest. It should run with the highest priority and have the highest priority for controlling the robot, and the algorithm must execute fast enough to meet our real-time requirements.

In short, in my opinion, obstacle avoidance can to some extent be regarded as a special case of autonomous navigation planning. Compared with global navigation, it demands better real-time performance and higher reliability, and it is characterized by being local and dynamic. We must keep this in mind when designing the robot's overall hardware and software architecture.

What are the obstacle avoidance strategies for multi-machine collaboration?

Coordinated multi-robot obstacle avoidance is still a hot topic across the whole SLAM field. For obstacle avoidance, the current approach when two or more robots work together is that each robot maintains its own local, relatively dynamic map, while all robots share one relatively static map. When two robots approach the same location, the dynamic maps they maintain are merged.

What is the advantage of this? A vision system, for example, can only see in the forward direction. After merging with the dynamic map of a robot behind it, a robot can see the dynamic information both in front and behind, and then complete its obstacle avoidance.

The key to multi-robot collaboration is the sharing of these local maps. Each local map occupies a small window on the relatively static global map; when two windows can be merged, they are merged and used together to guide the obstacle avoidance of both robots. In a concrete implementation we must also consider information transfer. A robot's own local map is processed on board, so access is generally fast. When two robots cooperate, transmission delay and bandwidth must be taken into account.
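One possible way to picture the window merging is shown below. It is a hypothetical sketch, not a description of our implementation: each local map is a dictionary from global grid cells to an occupancy value, each window is an axis-aligned rectangle in global grid coordinates, and where both robots observed the same cell the higher occupancy is kept as the more cautious choice.

```python
def windows_overlap(a, b):
    """a, b: (x_min, y_min, x_max, y_max) in global grid cells, bounds inclusive."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def merge_local_maps(map_a, map_b):
    """Merge two {(x, y): occupancy} maps, keeping the higher occupancy per cell."""
    merged = dict(map_a)
    for cell, occupancy in map_b.items():
        merged[cell] = max(occupancy, merged.get(cell, 0.0))
    return merged


if __name__ == "__main__":
    window_a, window_b = (0, 0, 4, 4), (3, 3, 8, 8)
    map_a = {(1, 1): 0.2, (3, 3): 0.9}
    map_b = {(3, 3): 0.4, (6, 6): 0.7}
    if windows_overlap(window_a, window_b):
        print(merge_local_maps(map_a, map_b))
```

Only the cells inside each small window need to be exchanged between robots, which helps keep the bandwidth requirement low.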

Are there any standard tests and metrics for obstacle avoidance?

As far as I know, there is no unified test standard or metric in the industry. When testing, we consider metrics such as how effective obstacle avoidance is with a single obstacle or multiple obstacles, and with static or dynamic obstacles; how good the planned path actually is; and whether the trajectory is smooth and matches what we would intuitively expect.

Of course, I think the most important metric is the success rate of obstacle avoidance. Avoidance must succeed whether the obstacle is static or dynamic and regardless of its material. For a moving person, for example, the clothes they wear should not affect the obstacle avoidance function. We also look at how different environments, such as bright or dim light, affect the result. For obstacle avoidance, the success rate is the most critical.
