Security and risk leaders now need to determine whether artificial intelligence (AI) or machine learning offers real value in development, operations, and application security testing. They must recognize that applying AI and machine learning requires large amounts of data and skilled staff, and they must be able to estimate the speed, accuracy, and other practical realities of an AI security implementation.

Key challenges

Artificial intelligence (AI) and machine learning have become such popular marketing terms that it is difficult to distinguish marketing exaggeration from real value for users.

Marketing hype presents AI as a great new technology. As data analysis grows more complex and data security requirements keep increasing, security is often described as a perfect fit for AI. However, it is not certain that AI will help security and risk experts in every situation, or that it outperforms traditional security methods in every scenario.

The quality of enterprise data governance directly affects the effectiveness of AI security systems. Vendors of AI systems often fail to emphasize this to users in advance, which makes it difficult to apply those systems effectively.

Recommendations

To support application and data security:

Verify whether security products that use AI are faster and more accurate than traditional products, and whether the improvement is worth the cost.

Define quality metrics as benchmarks for assessing the effectiveness of AI technology in anomaly detection and security analysis.

Determine whether using AI technology will affect current business processes. Confirm that staff skills meet the requirements of the AI application, how often the model needs to be retrained, and whether the tools for data curation are reliable.

Before purchasing AI security products, run tests with your own data and infrastructure, or set up a proof of concept (POC) for "quick trial and error" to determine the scope of the products' impact.

Make sure the people who assess and manage AI security systems are familiar with the types of data the project uses and the expected results, and give them the resources they need to become an important part of your security process.

Strategic Planning and Forecasting

Today, 10% of security vendors claim to be AI-driven. By 2020, 40% of vendors will claim to be AI-driven.

▌ Introduction

"Artificial intelligence" is a broad term that covers a vast array of very different technologies and algorithms. AI has recently made great progress in deep learning, machine learning, and natural language processing. It has moved from a niche field into mainstream commercial software and is widely used in customer support, security, predictive analytics, automated driving, and other fields.

However, because of the enthusiasm surrounding the technology, "artificial intelligence" has become a popular marketing term. This makes it difficult for customers without an AI background to assess whether a product actually uses the technology and whether it offers more benefits than traditional technologies. According to Gartner, the number of client inquiries related to security and AI more than tripled from 2015 to 2016, reflecting the strong interest in, and expected profitability of, this new technology.

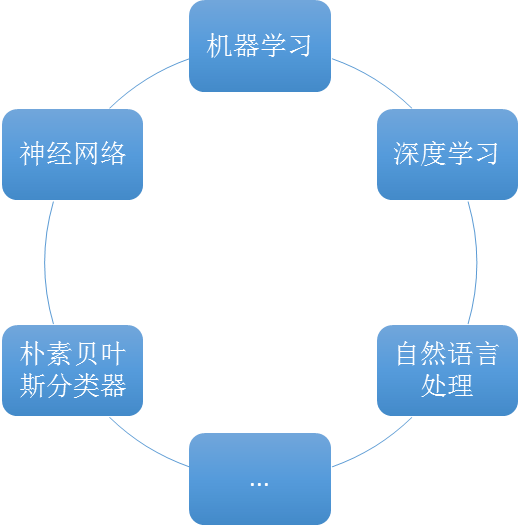

This phenomenon is especially common in application security. AI has become a common tool in DevOps security, for example in application security testing (AST), network traffic analysis, security information and event management (SIEM), and user and entity behavior analytics (UEBA; see "The Fast-Evolving State of Security Analytics, 2016"). However, like all technologies, AI has its limitations, and successful implementation often depends on whether the expected outcome is realistic. This article provides guidelines for evaluating AI and machine learning and helps security leaders determine whether their company can implement AI effectively. Common commercially available AI technologies are shown in Figure 1.

Figure 1 Common AI Business Technologies

Analysis

Identify the true capabilities of AI suppliers and determine whether they significantly improve on existing systems

Security leaders should take a careful look at product claims based on AI technology. Although AI is a terrific marketing term, it is effective only for certain security problems, typically those with large, well-understood data sets.

False positives in static AST (SAST) are a good example. Even for medium-sized projects, security testing tools generate large amounts of well-understood data, and feedback on that data can be obtained from human experts. Because the accuracy of the output can be evaluated with existing processes, applying AI here makes sense. For an AI system to be worthwhile in security, it should show one or more of the following characteristics:

Automation - This is the most basic feature of AI tools. Used properly, an AI tool is a capacity multiplier: it can do the work of dozens or hundreds of experts in a consistent manner and can be deployed quickly and reliably across many tasks. A trained AI system can process data several orders of magnitude faster than a team of experts. However, if it is not resourced and used effectively, sloppy automation will produce worse results than traditional techniques.

Accuracy - This is the greatest advantage of AI tools. A well-trained tool can match or even exceed the accuracy of human security experts. The skills of multiple experts can be combined into a single analytical capability and, depending on the technology used, can generate new insights into existing problems.

Early Warning - Human experts eventually tire of reviewing code and test results, or overlook details, whereas machine learning can maintain the same level of objectivity indefinitely. Throughout product development, scaling human expertise is always difficult and expensive, while scaling AI technology is relatively easy and inexpensive.

Correlation across multiple parameters - Machine learning trains on large amounts of data and can keep learning in production. It can therefore relate seemingly unrelated features and mine data effectively, tasks that are difficult and time-consuming for humans.

For example, IBM provides extended analysis of SAST results to reduce false positives. This type of analysis can correlate multiple vulnerabilities so that they can be fixed with a single change. IBM's Intelligent Finding Analytics (IFA) filters out false positives, noise, and findings that are unlikely to be exploited. It can also group the filtered results into "fix groups" that cover multiple vulnerability traces. Other SAST providers have gradually adopted similar features.

Assess the effectiveness of AI technology for anomaly detection and security analysis by defining quality metrics

"A fully scripted demonstration cannot show how well a product really works."

- Executive Director of Security Development at a financial services company

Many vendors' claims about AI technology are unattainable, but when it comes to actual results, neither blind belief nor blanket disbelief is appropriate. What matters is the objective data that supports the claimed effect. Recent breakthroughs in machine learning and deep neural networks have greatly improved the speed and accuracy of results, so some impressive-sounding claims may be true, while others may be sales exaggeration.

We must maintain a clear understanding that new technologies are not necessarily better methods of detection. For example, anomaly detection itself can be achieved by many basic techniques:

Signature (fingerprint) matching, which is suitable when the attacker is known.

Pattern matching and policy-based techniques, which are suitable when the attack technique is known.

Whitelisting, which is suitable when the expected behavior is known (and consistent).

Based on this, it is crucial to develop an evaluation framework that is independent of the technology itself and accurately assess the pros and cons of new technologies and existing solutions.
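As a point of comparison for any AI product, the three basic techniques listed above can be sketched in a few lines. This is a minimal illustration, not a production detector: the hash value, the regular expressions, the command names, and all function names are hypothetical placeholders chosen for the example.

```python
import re

# Hypothetical example data; none of these values come from a real product.
KNOWN_BAD_HASHES = {"0123456789abcdef0123456789abcdef"}   # artifacts tied to a known attacker
ATTACK_PATTERNS = [
    re.compile(r"(?i)union\s+select"),                    # known attack technique: SQL injection
    re.compile(r"\.\./"),                                 # known attack technique: path traversal
]
ALLOWED_COMMANDS = {"GET_BALANCE", "LIST_ACCOUNTS"}       # known, consistent expected behavior


def matches_signature(file_hash: str) -> bool:
    """Signature matching: flag an artifact already attributed to an attacker."""
    return file_hash.lower() in KNOWN_BAD_HASHES


def matches_attack_pattern(request: str) -> bool:
    """Pattern matching: flag input that looks like a known attack technique."""
    return any(pattern.search(request) for pattern in ATTACK_PATTERNS)


def violates_whitelist(command: str) -> bool:
    """Whitelisting: flag anything outside the known expected behavior."""
    return command not in ALLOWED_COMMANDS
```

Each check only works when its precondition holds (known attacker, known technique, known expected behavior), which is why an AI product should be measured against these baselines rather than assumed to beat them.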

When evaluating a new technology, the first objective is to define quality metrics for the expected results. These metrics must be independent of the technical implementation. Take two important machine learning applications as examples: anomaly detection (used to increase the detection rate) and security analysis automation (used to reduce false positives, aggregate existing events into new events, and generate recommended actions):

The quality metrics for anomaly detection include coverage ("Can it see everything?"), detection rate, and false alarm rate.

The quality metrics for security analysis automation include the average time to resolve an incident and the percentage of incidents resolved (compared with the original baseline).

Of course, a fair assessment is difficult to obtain, especially when different technologies are assessed at different times. The quality metrics should therefore be normalized so that the comparison is fairer.
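The quality metrics named above are simple ratios, so they can be computed the same way for an AI product and for the existing tooling. The following is a sketch under stated assumptions: the class and function names are hypothetical, and the definitions used (detection rate as the share of real incidents flagged, false alarm rate as the share of benign events flagged) are the conventional ones rather than definitions given by any vendor.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DetectionCounts:
    true_positives: int    # real incidents that were flagged
    false_positives: int   # benign events that were flagged
    false_negatives: int   # real incidents that were missed
    true_negatives: int    # benign events that were correctly ignored


def detection_rate(c: DetectionCounts) -> float:
    """Share of real incidents that the tool flagged."""
    actual = c.true_positives + c.false_negatives
    return c.true_positives / actual if actual else 0.0


def false_alarm_rate(c: DetectionCounts) -> float:
    """Share of benign events that the tool flagged anyway."""
    benign = c.false_positives + c.true_negatives
    return c.false_positives / benign if benign else 0.0


def coverage(monitored_assets: int, total_assets: int) -> float:
    """'Can it see everything?': share of assets the tool actually observes."""
    return monitored_assets / total_assets if total_assets else 0.0


def mean_time_to_resolve(hours_per_incident: list) -> float:
    """Average time to resolve an incident, in hours."""
    return mean(hours_per_incident) if hours_per_incident else 0.0


def percent_resolved(resolved: int, total: int) -> float:
    """Percentage of incidents resolved."""
    return 100.0 * resolved / total if total else 0.0
```

Running the same functions on counts collected from the current tools gives the baseline; running them on counts from the candidate product over the same period and the same data gives a technology-independent comparison.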

When evaluating techniques promoted by suppliers, ask the following questions:

What percentage of the total problems are solved by AI technology? Can AI technology handle the actual key issues?

Does the system learn continuously, or does it require periodic retraining sessions?

What is the origin and scope of training data provided by suppliers? How much similarity does it have with the company's data?

Do your results come from a neutral third party or from a case study?

How much better are these results than the traditional technologies we are using now?

Does this improve core security, or does it address peripheral security requirements?

In order to successfully use AI products, what changes do I need to make to existing employees (for example, do I need to hire a data scientist)?

Does the supplier benefit from a "network effect"? (That is, can results be shared with other customers?)

What data does the supplier send to its own servers? (This question concerns privacy protection.)

If possible, ask existing customers about their experience with the product, especially customers whom the supplier has not rewarded for acting as a reference. In addition, pay attention to the implementation cost of the entire product, especially for consulting and data governance.

For example, a medium-sized insurance company was evaluating a static analysis tool with "AI components." The accuracy of the tool did not appear to be better than that of traditional non-AI technology when used as part of complete results management. Investigation showed that the analysis engine did not use AI at all; only an unrelated help system did.

Determine the process changes and new staff skills that AI technology requires

The results of machine learning and other AI techniques depend to a large extent on the data used for training. If the training data is of poor quality, even the most advanced algorithms will not help you achieve your goals. The "garbage in, garbage out" principle describes this situation well: if the input to the system is poor, the output will be poor too. A lack of data governance is therefore often the key factor behind failure. To achieve effective results, you may need to adjust your processes and staffing.

Therefore, when evaluating AI products, ask the supplier the following questions:

How often does the model need to be retrained, and is retraining your responsibility or ours?

What tools are available for data governance, and how much data is needed for training?

Do I need to hire a data scientist or can I use an existing employee instead?

When the final result loses its accuracy, how difficult is it to identify and remove bad data from the product?

If accuracy deteriorates over time, what do I need to do?

Does the product support continuous training and adjustment?

Run a pilot or POC before purchase, using your own data and test infrastructure for quick verification

Before purchasing AI products, security leaders should first run a pilot that validates the product against the company's existing data, or set up a POC for rapid verification. This approach quickly reveals the product's problems, whether existing processes need to change, whether specialist staff are required, and whether the technology will actually deliver benefits. It also deepens the organization's understanding of its data governance needs and establishes the speed and accuracy of the technology. The evaluation metrics must, of course, be quantifiable (for example, accuracy, speed, and quality of results).

Set clear, quantifiable goals before the pilot begins. If you want your team to spend less time chasing false positives, suitable metrics are the accuracy of results, the speed of analysis, or the time from identifying a defect to its final fix.
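One way to keep pilot goals quantifiable is to write each goal down with its baseline and target before the pilot starts and then check the measured values mechanically. The sketch below assumes a hypothetical `PilotGoal` record and hypothetical metric names; the numbers in the usage note are invented for illustration only.

```python
from dataclasses import dataclass


@dataclass
class PilotGoal:
    metric: str              # name of the quantified metric
    baseline: float          # value measured with the current tooling
    target: float            # value agreed on before the pilot starts
    higher_is_better: bool   # True for accuracy-like metrics, False for time-like metrics


def goal_met(goal: PilotGoal, measured: float) -> bool:
    """Check one measured value against its pre-agreed target."""
    if goal.higher_is_better:
        return measured >= goal.target
    return measured <= goal.target


def evaluate_pilot(goals: list, measurements: dict) -> dict:
    """Return {metric: (met, change_vs_baseline)}.

    A goal with no measurement counts as not met, so gaps in the pilot
    data are visible instead of silently ignored.
    """
    report = {}
    for goal in goals:
        if goal.metric not in measurements:
            report[goal.metric] = (False, None)
            continue
        measured = measurements[goal.metric]
        report[goal.metric] = (goal_met(goal, measured), measured - goal.baseline)
    return report
```

For instance, a team might record `PilotGoal("triage_precision", 0.40, 0.60, True)` and `PilotGoal("scan_minutes", 90, 45, False)` before the pilot and pass the values measured during the pilot to `evaluate_pilot`. The purchase decision then rests on targets that were fixed before any vendor demonstration.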

Assign someone to evaluate and manage AI, making it an important component of your security process

As data volumes and product complexity grow, AI is gradually becoming a basic technology in security products. At the same time, the supplier market will change rapidly as companies are acquired or divested. Assigning a dedicated security officer to plan and manage internal AI projects is therefore becoming unavoidable. This person must be familiar with the types of data your projects use and the types of results you expect.

You may consider hiring a data scientist who can assess the effectiveness of AI technology and who has expertise in data governance, ideally with a background in data science or computer science. The role requires specialized knowledge of machine learning, deep learning, neural networks, or other AI technologies. For a single project or a small company, the task can be handled by one designated person or by a part-time consultant.

For large companies with multiple projects, this task should be assigned to several people or a small team. Concentrating responsibility for AI projects reduces duplicated work and improves consistency. The AI team's responsibilities should include assessing AI products, managing corporate data, managing AI life cycles, and developing AI plans and policies for the whole company.

At the corporate level, this setup avoids scattering expertise across departments, wasting staff, or creating expertise bottlenecks. As AI becomes a common technology, it will make your business more flexible, let you use the latest and most reliable technology, and enable you to purchase new products quickly and plan your company's data warehouse.

The following example illustrates the last three points. A large Wall Street financial services company wanted to speed up security scanning of its applications as part of a DevOps upgrade, so it purchased a machine learning-based security product. The product was supposed to find high-risk defects and unstable compilation problems. In theory, the tool should have been very effective; however, after three months the company had still not achieved the expected accuracy.

The reason was that the employees responsible for the pilot, although involved in the AI project, had no direct experience with the product and had received no AI training. The company therefore hired a data scientist as a consultant, who soon discovered that the training data was not being managed properly. Feedback data was not screened before retraining (that is, no human reviewed it in the loop); instead, all test data was simply dumped into one large, undifferentiated pool for training. Some machine learning products can cope with such unfiltered feedback, but the product the company tested could not. In the end, the company hired the data scientist full time to manage all AI projects across the company and achieved good results.

Gartner Recommended Reading

"Hype Cycle for Smart Machines, 2016"

"Artificial Intelligence Primer for 2017"

"Predicts 2017: Artificial Intelligence"

"Top 10 Strategic Technology Trends for 2017: Artificial Intelligence and Advanced Machine Learning"

"The Fast-Evolving State of Security Analytics, 2016"

"Top 10 Strategic Technology Trends for 2017: A Gartner Trend Insight Report"

"How to Define and Use Smart Machine Terms Effectively"

Evidence

1. Based on the number of Gartner client inquiries about machine learning and AI in security: approximately 20 in 2015, approximately 60 in 2016, and approximately 60 from January to April 2017.

2. "Deep Learning: With massive amounts of computational power, machines can now recognize objects and translate speech in real time. Artificial intelligence is finally getting smart." MIT Technology Review.

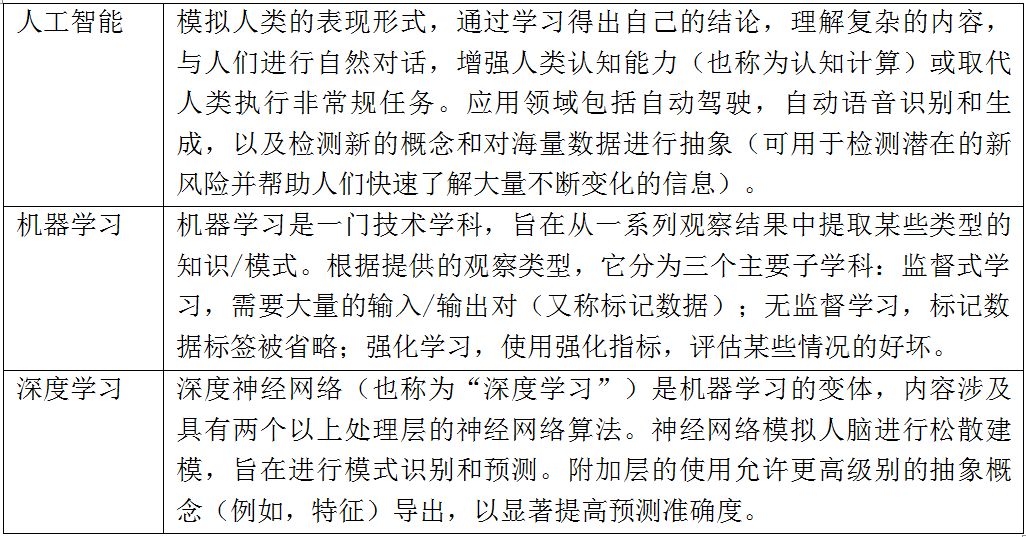

▌ Artificial Intelligence Concepts Overview

There are many approaches to "artificial intelligence," each of which has certain advantages in application security. "Artificial intelligence" is also a misleading label that sales teams misuse. There is no general artificial intelligence that can think, or be applied to a wide variety of tasks, the way a human can.

AI technology mainly refers to systems that learn from collected data, usage analysis, and other observations. In other words, each approach is a specialized, highly complex data analysis technique that can learn from examples and mature over time, but that focuses on a very narrow set of well-defined problems. Figure 1 lists some of the commonly used, commercially available technologies. Each has unique characteristics that distinguish it from the others. Although they differ mathematically, they share considerable common ground in operational requirements and general principles.

The elements common to conventional AI technologies are:

A large amount of data related to the problem is usually required.

Curating the data (adding, labeling, or deleting specific subsets) is critical to achieving effective results.

Many people are involved, including data scientists and domain experts.

Developing a model that produces good results takes weeks to months.

Once training is complete, processing can be very fast and very accurate for problems that closely match the training corpus.

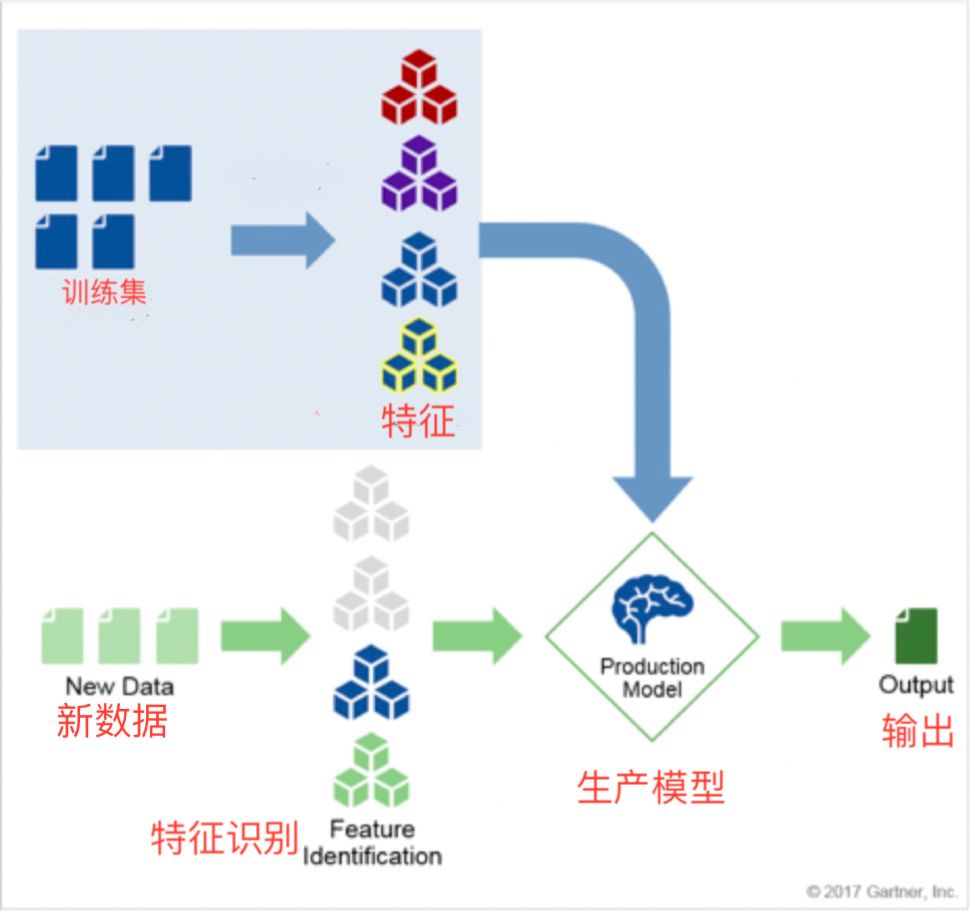

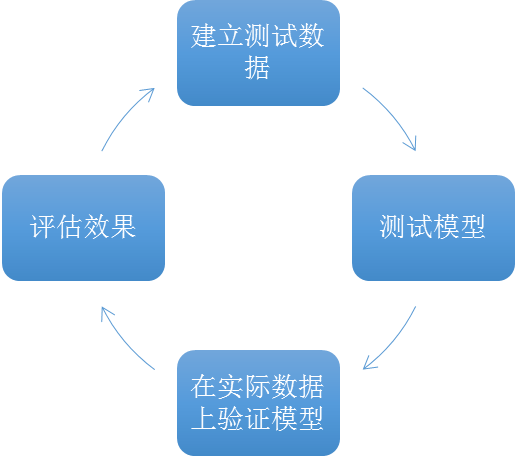

Machine learning is a well-established AI discipline that is widely used in security. The description here is a conceptual model covering many basic ideas; real-world machine learning involves many other techniques, improvements, and modifications beyond this simple description. Nevertheless, the model works as an abstraction of the common benefits and risks, and we can use it to understand the basic process for evaluating AI products in corporate security (see Figure 2).

Figure 2 The basic process for evaluating AI products

An AI system starts with a large body of training data, the training set. This data is carefully selected from the practical problem to be solved and stored as a knowledge base. In speech recognition, for example, it is a set of texts read aloud in a specific language. In application security, it may be the output of AST tools or a large number of representative malware samples.

Experts should curate the data so that it describes the problem under study well and ultimately yields a usable model. During curation, it is equally important to provide both positive and negative examples. An anomaly detection model needs both kinds, and the quality of the results drops if there are too many or too few negative examples.

The data is normalized and clustered to determine a set of feature vectors. Feature vectors often contain so many finely divided dimensions that they are difficult for any other system, or for a human, to interpret. The machine learning algorithm is then trained into a prediction model. Using validation data, you can check whether the model is valid and produces the desired results: the model's predictions for the validation set are compared with the known, labeled results to obtain its prediction accuracy. Training and validation continue until the model is accurate enough. The final production model contains descriptions of data features, probabilities, labels, and other data.
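The train-then-validate step described above can be illustrated with a deliberately tiny model. This sketch uses a nearest-centroid classifier as a stand-in for whatever algorithm a real product uses, skips normalization and clustering, and relies only on the Python standard library. All function names are hypothetical.

```python
import random
from collections import defaultdict


def split(samples, validation_fraction=0.25, seed=0):
    """Shuffle labeled samples and split them into training and validation sets."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - validation_fraction))
    return shuffled[:cut], shuffled[cut:]


def train_centroids(training):
    """'Train' by averaging the feature vectors of each label."""
    sums, counts = {}, defaultdict(int)
    for features, label in training:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(model, features):
    """Return the label whose centroid is closest to the feature vector."""
    def squared_distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))
    return min(model, key=lambda label: squared_distance(model[label]))


def accuracy(model, validation):
    """Share of validation samples whose prediction matches the known label."""
    if not validation:
        return 0.0
    hits = sum(predict(model, features) == label for features, label in validation)
    return hits / len(validation)
```

In practice the loop is: split the curated data, train on the training set, measure `accuracy` on the held-out validation set, and adjust the data or the model until the measured accuracy is good enough. The key point for an evaluator is that accuracy is always measured on data the model has not seen during training.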

For a self-evolving model, new data keeps arriving once the model is applied in the real world; feature vectors are continuously extracted and applied to the production model, and the model is updated through continuous training.

When applied to AST, the output of a SAST tool can be fed into a trained system to detect false positives. The result is a list of false positives (or a list of findings with the false positives filtered out) at a given confidence level. To improve the results, you can review the output, identify new false positives, feed them back into the training set, and compute a new model. As this cycle continues, new information is incorporated into the prediction algorithm, which ideally improves over time (see Figure 3).
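The triage-and-feedback cycle described above can be sketched as two small functions. This is an illustration under assumptions, not any vendor's implementation: `score_fn` stands for a hypothetical trained model that returns the estimated probability that a finding is a false positive, and the threshold value is arbitrary.

```python
def triage(findings, score_fn, threshold=0.8):
    """Split findings into likely false positives and findings kept for review.

    score_fn(finding) is assumed to return the model's estimated probability
    that the finding is a false positive; findings at or above `threshold`
    are filtered out, everything else is kept.
    """
    filtered, kept = [], []
    for finding in findings:
        if score_fn(finding) >= threshold:
            filtered.append(finding)
        else:
            kept.append(finding)
    return filtered, kept


def add_feedback(training_set, reviewed):
    """Add human-reviewed (finding_id, is_false_positive) pairs to the training set.

    Only reviewed, labeled items are added, and findings that are already in
    the training set are skipped, so unscreened data never reaches training.
    """
    known = {finding_id for finding_id, _ in training_set}
    for finding_id, is_false_positive in reviewed:
        if finding_id not in known:
            training_set.append((finding_id, is_false_positive))
            known.add(finding_id)
    return training_set
```

The design choice worth noting is that `add_feedback` accepts only findings a person has reviewed and labeled. Feeding every raw result back into the training pool is exactly the failure described in the financial services example earlier in this article.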

Figure 3
